Monitoring and Evaluation Planning

YOU ARE VIEWING DATA GUIDE #4 OF 5

Without Monitoring & Evaluation (M&E), you can’t know where to strategically make change and maximize the efficiency of your inputs. ASA’s Monitoring and Evaluation Planning Guide supports campus teams as you develop a plan to assess your student success strategies and initiatives — regardless of where you are in the assessment planning process. This workbook contains guidance and example templates to support each step of the M&E Planning process.

We encourage you to read through this guide and its templates to orient yourself to the contents. Then, with your team, decide where to dig in:

- Just Setting Out. Start at Step 1 and work your way through.

- In the Midst of Planning. Discuss with your team whether you have taken the pertinent design questions included in Step 1 into consideration to ensure an effective and efficient plan.

- Our M&E Plan is Complete. M&E plans are living and evolving. Review your current plan to ensure all considerations are addressed. What is going well? What might you have done differently? Stakeholder considerations, and dissemination and communication are good topics to focus on as these steps are of utmost importance but are sometimes given less time or priority.

This Planning Guide is intended to provide a broad overview of constructs and the spectrum of considerations to take into account as you create your plan. Additional resources that detail various M&E frameworks are included at the end.

Please contact us if you need assistance as you develop your map or your Monitoring and Evaluation Plan to support your institution’s student success work.

Step 1. Where Do We Start?

When considering whether you need to develop a monitoring plan, an evaluation plan, or a combination of the two, it helps to understand some fundamental differences between monitoring and evaluation:

| | MONITORING | EVALUATION |
|---|---|---|
| Answers the question: | Are we doing things right? | Are we doing the right thing? |
| Focuses on: | Improving efficiency. | Improving effectiveness and relevance. |
| Process: | Ongoing, to assess whether activities are on track to meet targets. | Periodic, to assess and measure success against objectives. |
| Level: | Operational | Strategic |
| Audience: | Program implementation or management team. | Broader internal and external stakeholders. |
| Scope: | Effective monitoring can operate on its own. | Effective evaluation relies on monitoring. |
| Results & information used to: | Track and assess what has changed, including both the intended and the unintended. | Understand the reasons for changes, including facilitating and inhibiting factors; interpret the changes, such as perceptions and experiences; capture lessons learned. |

To begin, respond to the questions in the planning template. Your Metrics and Activities Maps and Targets can help you to respond to these questions.

As you develop your plan, be sure to consider:

- What do we want to learn? What are you trying to answer or achieve with the assessment?

- What else is going on? Understand the influences of policies and strategies and the structures that affect implementation. Also consider other assessments that will be conducted at the same time which may either support or conflict with your assessment, or limit the capacity of those involved.

- What resources do we have? Consider the available resources, both financial and human, and the amount of time needed for an effective assessment.

- Who are the stakeholders? Consider all appropriate stakeholders — internal and external — and decide how to involve them during assessment design, implementation, analysis, reporting and communications. What are their various assessment information needs?

- Be flexible! Programs and strategies change during their life cycles; a relevant and effective monitoring and evaluation plan needs to as well.

Step 2. Define Indicators

“What gets measured gets improved.”

PETER DRUCKER

Indicators should be:

- Student-centric: What is the related student outcome or behavior you wish to see?

- Measurable: How can you measure it?

- Actionable: What action can you take from the resulting data?

- Aligned with strategy: Do the indicators support strategic decisions?

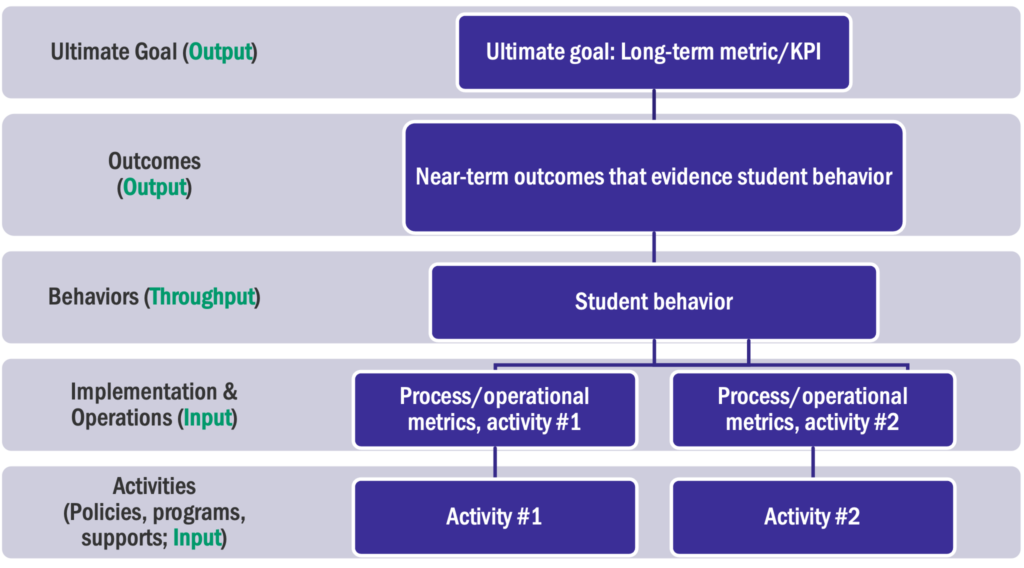

Identify what is to be measured and for what purpose. Define indicators that can track progress toward achieving the goals stated in Step 1. Indicators should measure process and outcomes and support lessons learned. Use your Metrics and Strategy Maps to help identify indicators. Note that the metrics you identified on your Maps may not be exactly the same ones used for monitoring or evaluation. Consider each metric and what action you will be able to take once you see the results. Include only actionable metrics that will help support assessment and decision making.

Which indicators support monitoring progress toward targets, or answer, “Are we doing the work right?”

Which indicators support evaluation and strategic decision making, or answer, “Are we doing the right work?”

What additional indicators or data do we need? Quantitative? Qualitative?

Define your indicators and complete this planning template.

Step 3. Define Data Collection Methods, Schedule, Roles & Responsibilities

For each of the indicators identified in Step 2, identify the data source and availability, frequency of collection, and the responsible party. Complete the planning template. Consider a few tips while doing so:

- Discuss what data needs to be monitored and evaluated with all stakeholders.

- Indicators should be objective, verifiable, consistently defined and operationalized, and easily understood.

- Don’t forget qualitative data sources!
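One way to keep the Step 3 details together is to record each indicator as a small structured row. The sketch below is only an illustration in Python, not part of the planning template: the `IndicatorPlan` class, the `missing_fields` helper, and the sample values for data source and frequency are all hypothetical.

```python
from dataclasses import dataclass, fields


@dataclass
class IndicatorPlan:
    """One row of a data collection plan (hypothetical structure)."""
    indicator: str
    data_source: str
    frequency: str
    responsible_party: str


def missing_fields(plan: IndicatorPlan) -> list[str]:
    """Return the names of any fields that were left blank."""
    return [f.name for f in fields(plan) if not getattr(plan, f.name).strip()]


plan = IndicatorPlan(
    indicator="Number of faculty completing online teaching training",
    data_source="Training enrollment records",  # illustrative value
    frequency="Each term",                      # illustrative value
    responsible_party="",                       # not yet assigned
)
print(missing_fields(plan))  # prints "['responsible_party']"
```

A check like this can flag indicators that still lack a data source, a collection schedule, or an owner before the plan is finalized.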

Step 4. Create an Analysis and Reporting Plan

Include plans for how to compile, analyze, and report the data once they are collected, as well as who is responsible for doing so. The analysis and reporting plan contains specifics about any computations, methodologies, and statistical tests, along with templates for presentation. Complete the data collection planning template.

Sample Reporting Templates

Monitoring plans include targets and illustrate progress toward the target. A simple illustration follows. Consider graphical displays to best illustrate progress toward targets.

| INDICATOR | BASELINE | TIME PERIOD 1 | TARGET | % TARGET ACHIEVED |
|---|---|---|---|---|
| Example: Number of faculty participating in and completing online teaching training. | 0 | 30 | 150 | 20% |
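The "% target achieved" figure can be computed directly from the values in the example row. The sketch below is a minimal illustration in Python. The function name `percent_of_target` is hypothetical, and it assumes progress is the current value divided by the target, which matches the example row because its baseline is 0.

```python
def percent_of_target(current: float, target: float) -> float:
    """Return progress toward a target as a percentage.

    Assumption: progress = current value / target, which reproduces the
    example row (baseline of 0).
    """
    if target == 0:
        raise ValueError("target must be non-zero")
    return 100 * current / target


# Example row: 30 faculty trained in Time Period 1 against a target of 150.
print(f"{percent_of_target(30, 150):.0f}%")  # prints "20%"
```

When the baseline is not zero, a team may prefer to measure progress from the baseline instead, that is, (current - baseline) / (target - baseline). Whichever definition is used should be recorded in the analysis plan.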

An evaluation plan using quantitative data may include outcomes comparisons of the treatment and the non-treatment groups, or on pre/post-treatment measures. Qualitative data to support an evaluation plan may result in themes identified via focus group findings, for example, exploring learning acquisition from the faculty training, why some faculty did not participate in training, or the value seen in the training. An example of a quantitative display is below. See the planning template to complete the form.

| INDICATOR | BASELINE | POST-INTERVENTION | % CHANGE | COMPARISON GROUP | % DIFFERENCE, TREATMENT & COMPARISON GROUP |
|---|---|---|---|---|---|
| Example: Number of faculty participating in and completing online teaching training. | 10 | 30 | 200% | 25 | 17% |
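The two percentage columns in the example row can be reproduced with simple arithmetic. The Python sketch below is an illustration only, and the function names are hypothetical. The guide does not state how the "% difference" was calculated, so the code assumes the gap between groups is divided by the treatment-group value, which is the choice that yields 17% here.

```python
def percent_change(baseline: float, post: float) -> float:
    """Percent change from the baseline to the post-intervention value."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return 100 * (post - baseline) / baseline


def percent_difference(treatment: float, comparison: float) -> float:
    """Gap between the groups as a share of the treatment-group value.

    Assumption: dividing by the treatment value is what yields the 17%
    in the example row; dividing by the comparison value would give
    20% instead.
    """
    if treatment == 0:
        raise ValueError("treatment must be non-zero")
    return 100 * (treatment - comparison) / treatment


# Example row: baseline 10, post-intervention 30, comparison group 25.
print(f"{percent_change(10, 30):.0f}%")      # prints "200%"
print(f"{percent_difference(30, 25):.0f}%")  # prints "17%"
```

Because the denominator changes the result, it helps to state the chosen formula alongside the reporting template.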

Develop a baseline, and if possible, a control group, at the beginning.

If it is not possible to collect baseline data at the beginning, consider a retrospective collection. For a qualitative perspective, ask students to compare a situation before and after.

These sample report templates are provided in the Planning Templates for your use.

Step 5. Dissemination & Communication

Don’t keep it a secret! All stakeholders need monitoring and evaluation information so they can act.

Be deliberate about your reporting efforts to ensure the right information gets to the right team members so appropriate adjustments can be made when needed. Consider the following:

- Who are the stakeholders? That is, who needs the monitoring/evaluation reports and data: the program team, faculty, staff? Do results need to be shared with students or the community? How often or at what point in the strategy?

- What monitoring/evaluation data will be the most effective to inform staff and stakeholders to help make modifications and course corrections, or make decisions about strategy or policy?

- How can the lessons learned be applied in a meaningful way for various stakeholders?

- Consider whether research findings may inform the field and be applied for replication in other institutions or programs across the state or country. If so, how and what should be communicated?

- Operationally, what format will be most effective for each audience (dashboard, briefing, analytical report, formal evaluation report, infographics, memo, etc.)? Delivery (print or electronic)?

See ASA’s Data Communications Planning Guide for tips while developing your monitoring and evaluation reporting communication plan.

The communications planning template is provided in the Planning Templates for your use.

Evaluation Frameworks and Methodologies: Recommended Resources

- Center for Culturally Responsive Evaluation and Assessment (CREA) https://crea.education.illinois.edu/

- Fetterman, D.M., Rodriguez-Campos, L., & Zukoski, A.P. (2018). Collaborative, Participatory, and Empowerment Evaluation: Stakeholder Involvement Approaches. New York: Guilford Press.

- Hutchinson, K. (2017). A Short Primer on Innovative Evaluation Reporting. www.communitysolutions.ca

- Mertens, D.M. & Wilson, A.T. (2019). Program Evaluation Theory and Practice. New York: The Guilford Press.

- Patton, M.Q. (2008). Utilization-Focused Evaluation. Los Angeles: Sage.

- Thomas, V.G., & Campbell, P.B. (2021). Evaluation in Today’s World: Respecting Diversity, Improving Quality, and Promoting Usability. Los Angeles: Sage.